May 25, 2024 • 8 min read

The Role of AI in Enhancing Multimodal Interfaces

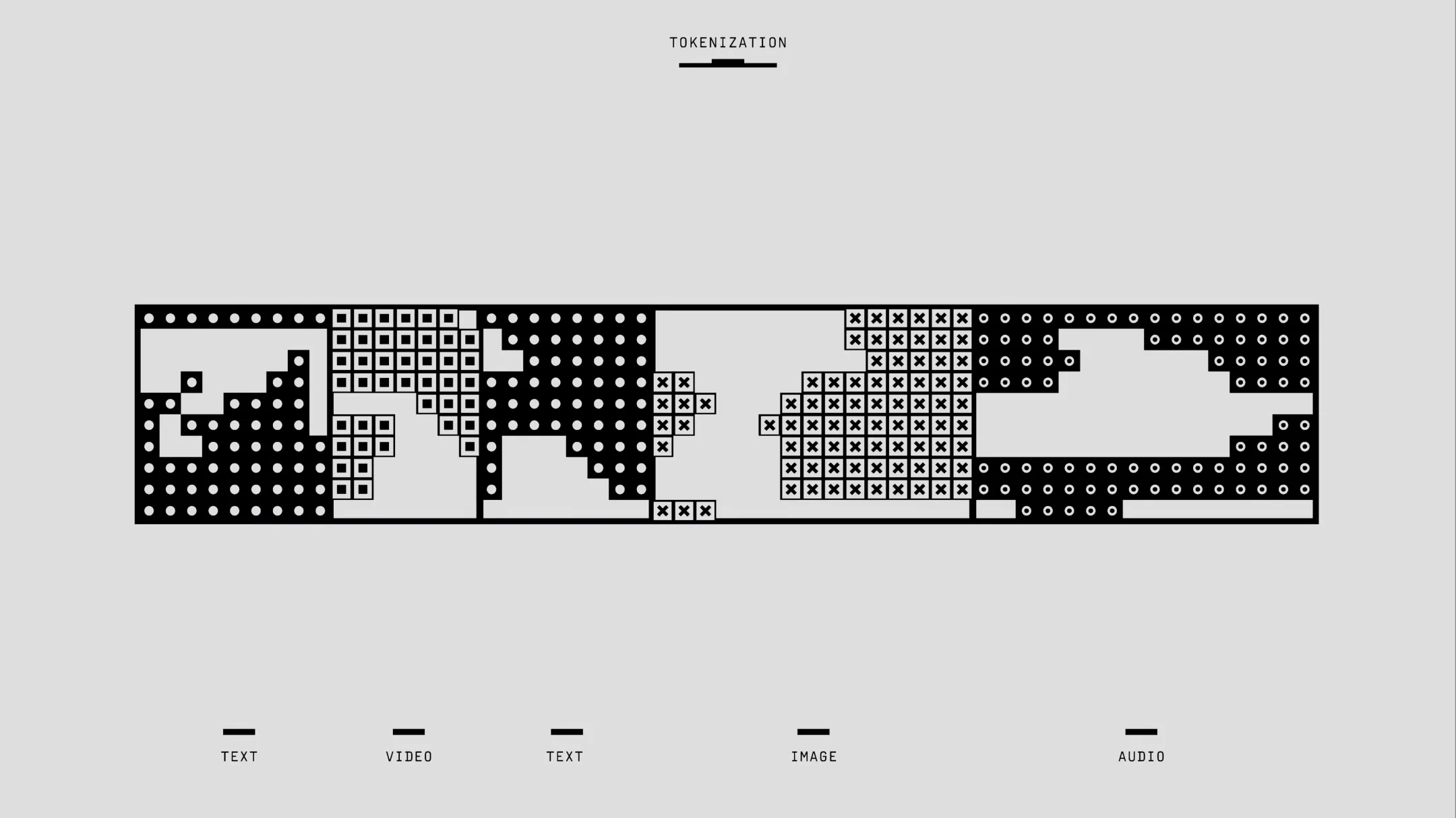

Multimodal interfaces combine several forms of input and output, such as speech, touch, gesture, and vision, and they are changing how we interact with technology. Artificial intelligence (AI) is central to this shift because it makes these interfaces more capable, more responsive, and better able to adapt to their users.

AI's Role in Enhancing Multimodal Interfaces

Improved Speech Recognition and NLP

AI has substantially improved speech recognition and natural language processing (NLP), allowing systems to understand and process conversational speech. Users can therefore speak to devices naturally and receive contextually relevant responses. Models trained on diverse speech data can also handle a wider range of accents, dialects, and languages, which makes these interfaces accessible to more people.

Advanced Computer Vision

AI-driven computer vision technologies are essential for interpreting visual inputs, such as facial expressions, gestures, and movements. By analyzing visual data, AI can detect and interpret user intentions, enhancing the interactivity and responsiveness of the interface. For instance, gesture recognition allows users to control devices through hand movements, while facial recognition can personalize user experiences and improve security.

Contextual Awareness and Adaptability

AI provides contextual awareness, enabling systems to adapt to the user's environment and behavior. Contextual AI can weigh factors such as location, time of day, current activity, and past interactions to deliver personalized and timely responses. This adaptability keeps the interface relevant as circumstances change, which can improve both user satisfaction and efficiency.
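As a minimal sketch of contextual adaptation, the Python below picks an output modality from a few context signals. The `Context` fields, the modality names, and the rules themselves are hypothetical: they are hand-written rules for illustration, whereas a deployed system would more likely learn such behavior from data.

```python
from dataclasses import dataclass


@dataclass
class Context:
    # Hypothetical context signals; a real system would derive these from sensors,
    # calendars, and interaction history.
    location: str   # e.g. "car", "office", "home"
    hour: int       # local hour of day, 0-23
    activity: str   # e.g. "driving", "meeting", "idle"


def choose_output_modality(ctx: Context) -> str:
    """Pick how to deliver a notification, using simple hand-written rules."""
    if ctx.activity == "driving":
        return "speech"          # eyes and hands are busy, so speak rather than display
    if ctx.activity == "meeting":
        return "silent_visual"   # avoid audible interruptions
    if ctx.location == "home" and (ctx.hour >= 22 or ctx.hour < 7):
        return "dimmed_visual"   # late at night at home: low-light display, no sound
    return "visual_with_sound"


print(choose_output_modality(Context("car", 9, "driving")))  # speech
print(choose_output_modality(Context("home", 23, "idle")))   # dimmed_visual
```

The rules are checked in order, so activity takes priority over location and time of day; reordering them changes which signal wins when several apply.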

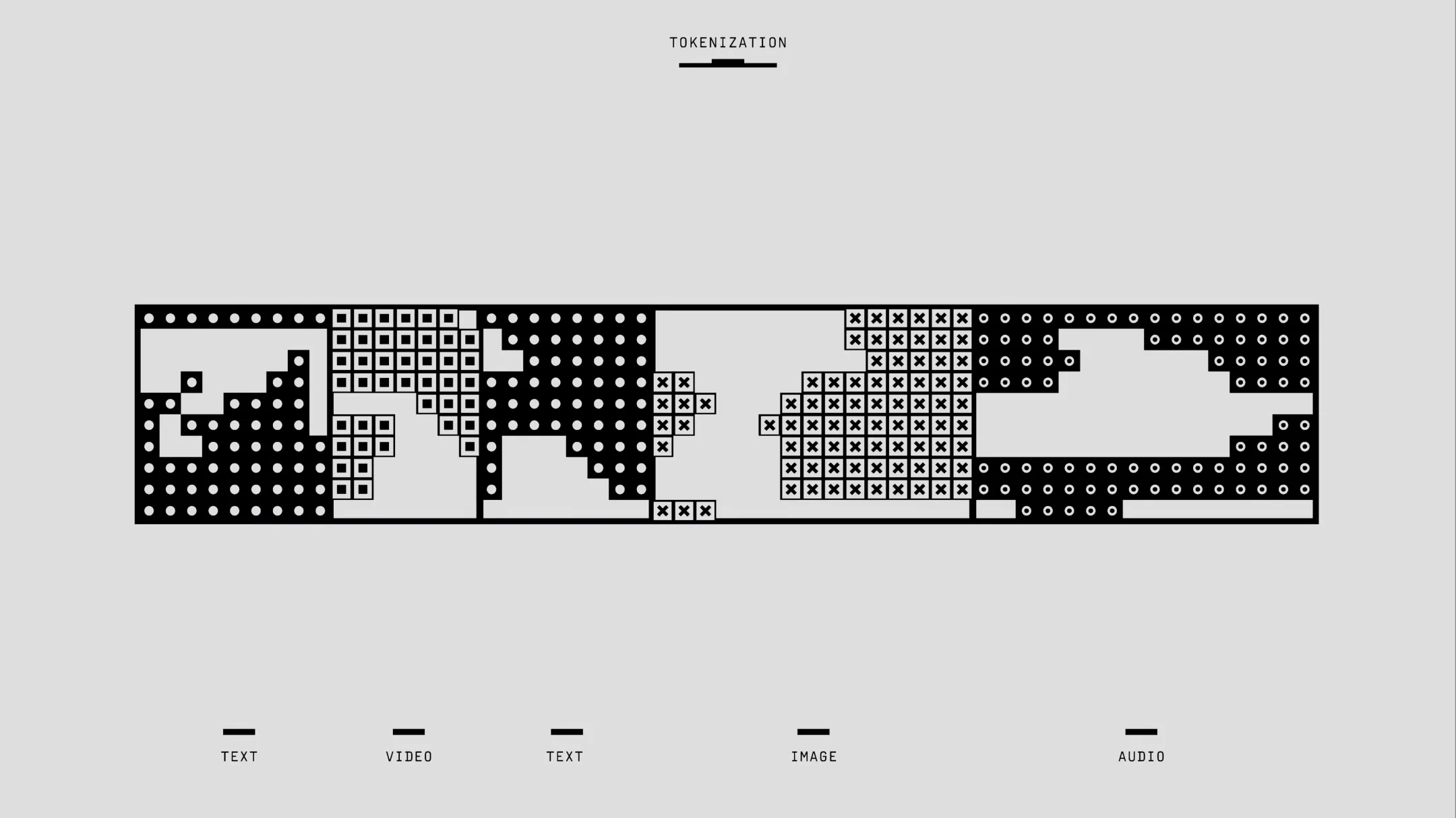

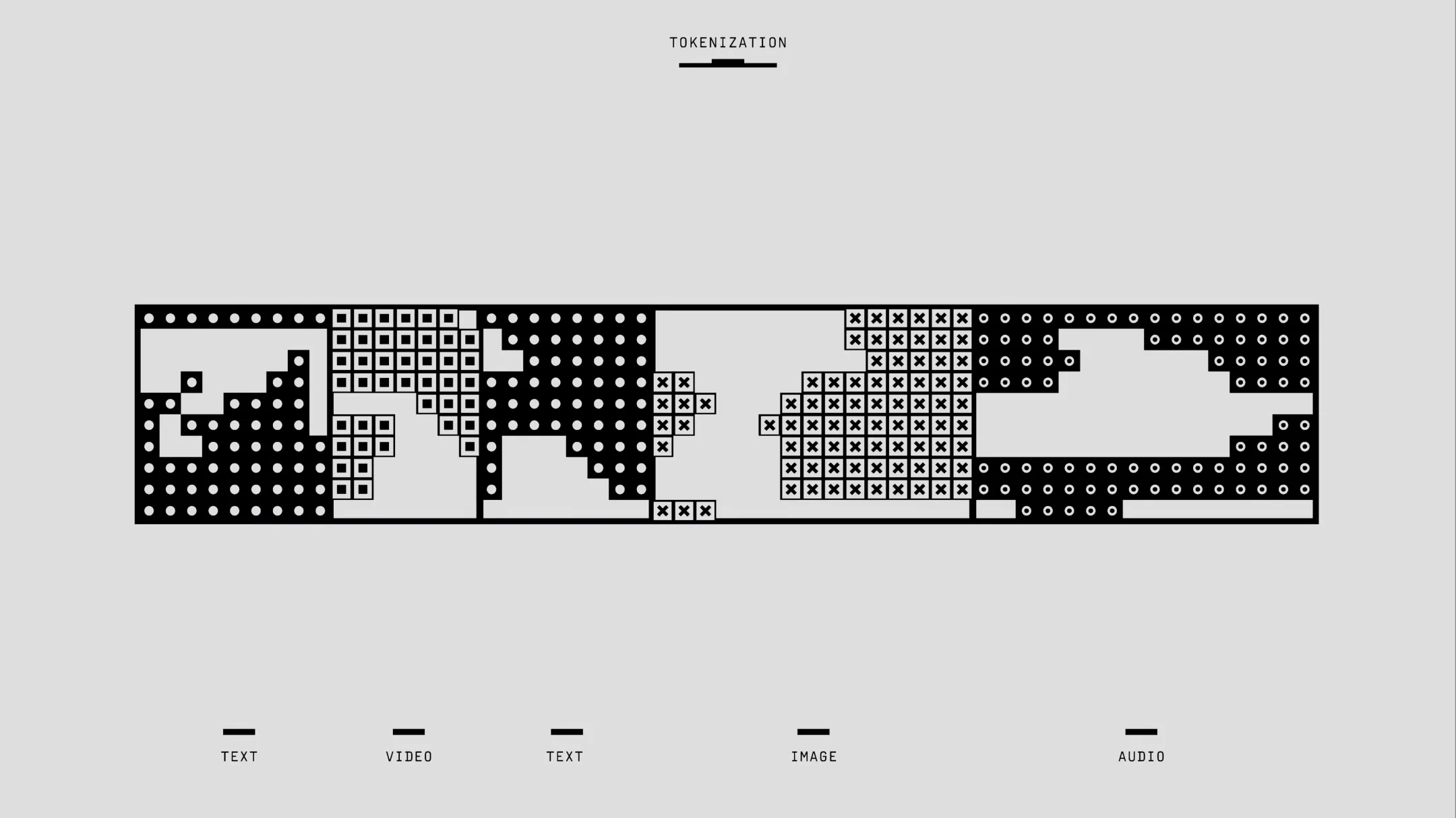

Integration of Multiple Modalities

AI is also what allows a system to integrate multiple modalities, processing and combining information from different sources at the same time. This multimodal fusion is what makes the experience feel cohesive rather than like several separate input channels. In a smart home, for example, AI can combine a voice command with a pointing gesture and visual context: "turn that on" becomes unambiguous once the system sees which lamp the user is pointing at. Such integration supports more complex and nuanced interactions than single-modality systems can.
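One common way to combine modalities is late fusion: each modality produces its own scores over the same set of candidates, and those scores are merged afterwards. The sketch below uses a weighted average of per-modality probabilities to resolve which device an ambiguous voice command refers to. The function name, the weights, and the example scores are illustrative assumptions, not values from a real system.

```python
def late_fusion(scores_by_modality: dict[str, dict[str, float]],
                weights: dict[str, float]) -> dict[str, float]:
    """Weighted-average late fusion of per-modality probability distributions.

    Candidates missing from a modality's scores are treated as probability 0.
    Weights are assumed to be positive; the result is renormalized to sum to 1.
    """
    candidates = set()
    for scores in scores_by_modality.values():
        candidates.update(scores)
    total_weight = sum(weights[m] for m in scores_by_modality)
    fused = {
        c: sum(weights[m] * scores.get(c, 0.0)
               for m, scores in scores_by_modality.items()) / total_weight
        for c in candidates
    }
    norm = sum(fused.values())
    return {c: p / norm for c, p in fused.items()} if norm > 0 else fused


# Hypothetical outputs from a speech model and a gesture model for "turn that on".
voice = {"lamp": 0.4, "tv": 0.4, "fan": 0.2}    # speech alone cannot pick a target
gesture = {"lamp": 0.8, "tv": 0.1, "fan": 0.1}  # the user is pointing toward the lamp
fused = late_fusion({"voice": voice, "gesture": gesture}, {"voice": 0.5, "gesture": 0.5})
target = max(fused, key=fused.get)
print(target)  # lamp
```

A weighted average tolerates one modality assigning zero probability to a candidate. Multiplying the distributions instead would let a single confident modality veto an option, which may or may not be the desired behavior.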

Learning and Personalization

Machine learning algorithms allow multimodal interfaces to learn from user interactions and improve over time. By analyzing user behavior and preferences, AI can tailor the interface to meet individual needs, offering personalized recommendations and automating routine tasks. This continuous learning process enhances the usability and efficiency of the interface, making it more intuitive and aligned with user expectations.
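A very simple form of learning from interactions is to nudge a stored preference toward whatever the user actually chooses. The sketch below keeps an exponential moving average per context (for example, a room and time of day), so repeated manual adjustments gradually become the default. The class name, the context labels, and the `alpha` learning rate are hypothetical; production personalization systems generally rely on richer models.

```python
class PreferenceLearner:
    """Learns a preferred numeric setting per context with an exponential moving average."""

    def __init__(self, default: float, alpha: float = 0.3):
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.default = default
        self.alpha = alpha  # how quickly new observations override old ones
        self.estimates: dict[str, float] = {}

    def observe(self, context: str, chosen: float) -> None:
        """Record a value the user set manually in the given context."""
        current = self.estimates.get(context, self.default)
        # Move the estimate a fraction alpha of the way toward the user's choice.
        self.estimates[context] = current + self.alpha * (chosen - current)

    def suggest(self, context: str) -> float:
        """Return the learned setting for this context, or the default if unseen."""
        return self.estimates.get(context, self.default)


thermostat = PreferenceLearner(default=20.0, alpha=0.5)
thermostat.observe("living_room/evening", 22.0)
thermostat.observe("living_room/evening", 22.0)
print(thermostat.suggest("living_room/evening"))  # 21.5
print(thermostat.suggest("bedroom/night"))        # 20.0 (no observations yet)
```

A larger `alpha` adapts faster but is more easily thrown off by a one-off adjustment; a smaller one is steadier but slower to follow a genuine change in preference.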

Practical Applications of AI-Enhanced Multimodal Interfaces

Healthcare

In healthcare, AI-enhanced multimodal interfaces could substantially change patient care and medical workflows. Voice-controlled assistants can help physicians access patient records and document consultations hands-free, while computer vision can monitor patients and flag abnormalities in real time. Together, these can reduce the cognitive load on healthcare professionals and may improve patient outcomes. AI can also assist diagnosis by analyzing medical images alongside other patient data, supporting more accurate and timely interventions.

Education

In educational settings, multimodal interfaces can create more engaging and interactive learning experiences. AI can facilitate personalized learning by adapting to each student's pace and style. For example, speech recognition can assist language learners with pronunciation, while gesture recognition can enable hands-on learning activities. Additionally, AI can provide real-time feedback and support to students, helping them overcome challenges and improve their skills.

Smart Homes and IoT

AI-driven multimodal interfaces are at the core of smart home technologies, allowing users to control their environment through voice, touch, and gestures. By integrating multiple modalities, AI can create a more responsive and intuitive home automation system, enhancing comfort, convenience, and security. For example, AI can recognize when a user enters a room and automatically adjust lighting, temperature, and other settings based on their preferences.

Automotive Industry

In the automotive industry, multimodal interfaces enhance driver safety and experience by combining voice commands, touch controls, and visual displays. AI can interpret driver behavior and environmental conditions, providing relevant information and assistance while minimizing distractions. For instance, AI can monitor the driver’s eye movements and alert them if they appear drowsy or distracted, improving overall road safety.
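Drowsiness detection from eye movements is often framed in terms of PERCLOS, the proportion of time over a recent window during which the eyes are mostly closed. The sketch below assumes a hypothetical upstream vision model that outputs an eye-openness score for each video frame (0 = closed, 1 = fully open). The window length and both thresholds are illustrative placeholders, not validated safety parameters.

```python
from collections import deque


class DrowsinessMonitor:
    """PERCLOS-style monitor: fraction of recent frames in which the eyes are mostly closed."""

    def __init__(self, window_frames: int = 300, closed_threshold: float = 0.2,
                 alert_fraction: float = 0.15):
        self.frames = deque(maxlen=window_frames)  # oldest frames drop out automatically
        self.closed_threshold = closed_threshold   # openness below this counts as "closed"
        self.alert_fraction = alert_fraction       # PERCLOS level that triggers an alert

    def update(self, eye_openness: float) -> bool:
        """Add one frame's openness score; return True if the driver should be alerted."""
        self.frames.append(eye_openness < self.closed_threshold)
        if len(self.frames) < self.frames.maxlen:
            return False  # not enough history yet to judge
        perclos = sum(self.frames) / len(self.frames)
        return perclos >= self.alert_fraction


monitor = DrowsinessMonitor(window_frames=10, alert_fraction=0.3)
alerts = [monitor.update(1.0) for _ in range(10)]   # eyes open: no alerts
alerts += [monitor.update(0.05) for _ in range(3)]  # eyes closed for 3 frames
print(alerts[-1])  # True: 3 of the last 10 frames were closed
```

Measuring closure over a sliding window, rather than reacting to single frames, keeps ordinary blinks from triggering alerts.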

Challenges and Future Directions

While AI-enhanced multimodal interfaces offer numerous benefits, they also present several challenges. Ensuring accuracy and reliability across different modalities requires sophisticated algorithms and substantial computational power. Privacy and security concerns must be addressed, particularly for sensitive data such as the voice recordings and facial images used for recognition. Designing interfaces that are universally accessible and inclusive also remains a critical consideration.

Looking ahead, multimodal interfaces will likely integrate AI capabilities even more deeply. Advances in machine learning, particularly deep neural networks, should further improve the accuracy, adaptability, and personalization of these systems. As AI continues to evolve, multimodal interfaces are likely to become more seamless, intuitive, and integral to daily life.

Conclusion

AI is fundamentally transforming multimodal interfaces, making interactions with technology more natural, intuitive, and efficient. By enhancing speech recognition, computer vision, contextual awareness, and personalization, AI enables the creation of interfaces that cater to the diverse needs and preferences of users. As technology continues to advance, AI-driven multimodal interfaces will play an increasingly important role in various domains, from healthcare and education to smart homes and automotive systems, paving the way for a more connected and intelligent world.
